SAIA

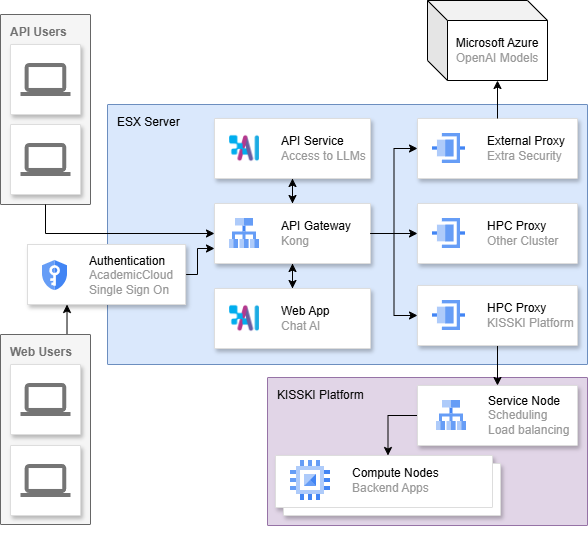

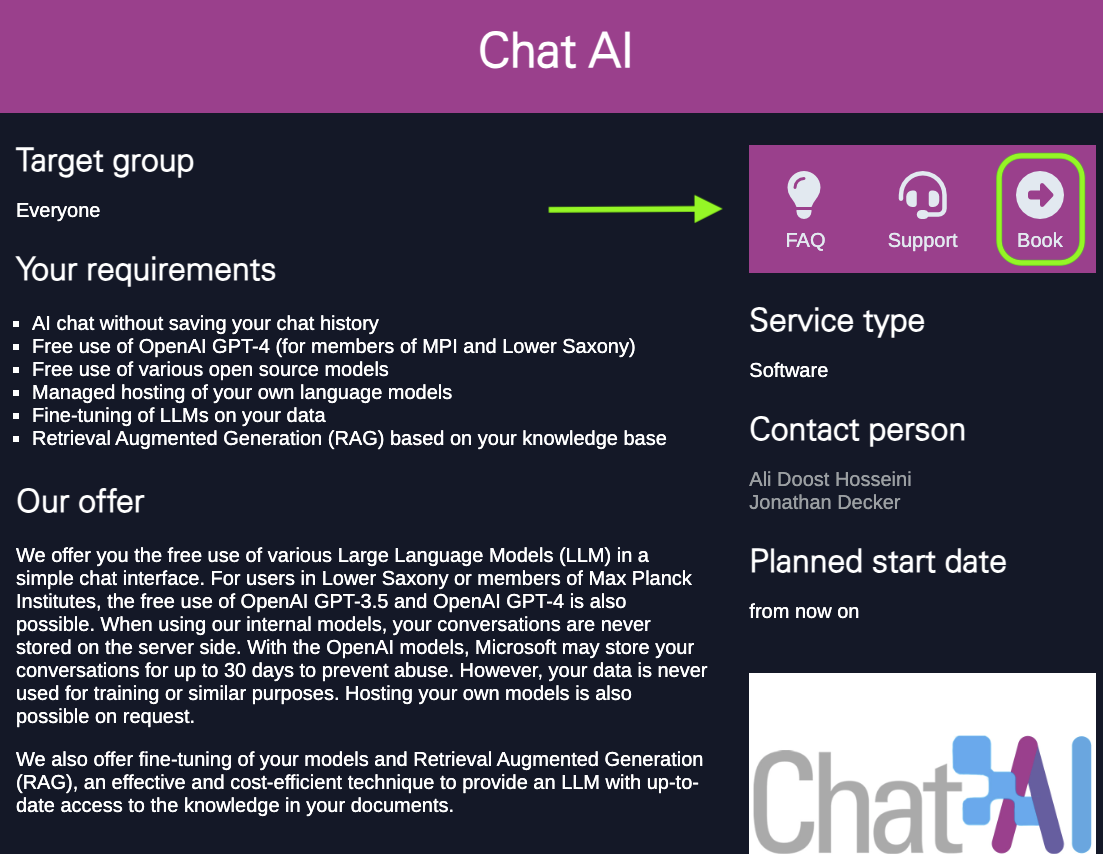

SAIA is the Scalable Artificial Intelligence (AI) Accelerator that hosts our AI services. These services include Chat AI and CoCo AI, with more to be added soon. You can request SAIA API (application programming interface) keys and use them to access the services from within your code.

API keys are not necessary to use the Chat AI web interface.

The SAIA API is suitable for interactive inference scenarios. If you have a large number of requests (e.g., thousands of LLM queries) that can be processed asynchronously, the batch paradigm of our HPC cluster is the better choice: your batch will complete more predictably, in less time, and at lower cost. See how to get started with our HPC cluster and then how to run LLMs on it to learn how to set up a batch inference job on the cluster. vLLM is another popular choice for LLM inference.

API Request

If you have an API key, you can use the available models from your terminal or from Python scripts. To request an API key, go to the KISSKI LLM Service page and click on “Book”. There you will find a form to fill out with your credentials and your intended use of the API key. Please use the same email address as the one assigned to your AcademicCloud account. Once you receive your key, DO NOT share it with other users!
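One way to avoid pasting your key directly into scripts (where it can end up in version control or be shared by accident) is to read it from an environment variable. The sketch below assumes a variable named SAIA_API_KEY; that name and the helper load_api_key are hypothetical choices for illustration, not something SAIA requires.

```python
import os


def load_api_key(var_name: str = "SAIA_API_KEY") -> str:
    """Return the API key stored in the given environment variable.

    SAIA_API_KEY is a hypothetical variable name chosen for this example;
    use whatever name suits your setup.
    """
    key = os.environ.get(var_name, "").strip()
    if not key:
        raise RuntimeError(
            f"Environment variable {var_name} is not set; "
            "export it before running your script."
        )
    return key
```

For example, run `export SAIA_API_KEY='<api_key>'` in your shell once, then use `api_key = load_api_key()` in place of the hard-coded key in the Python examples on this page.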

API Usage

The API service is compatible with the OpenAI API standard. We provide the following endpoints:

- /chat/completions
- /completions
- /embeddings
- /models
- /documents

API Minimal Example

You can use your API key to access Chat AI directly from your terminal. Here is an example of a chat completion request with the API.

```bash
curl -i -X POST \
  --url https://chat-ai.academiccloud.de/v1/chat/completions \
  --header 'Accept: application/json' \
  --header 'Authorization: Bearer <api_key>' \
  --header 'Content-Type: application/json' \
  --data '{
    "model": "meta-llama-3.1-8b-instruct",
    "messages":[{"role":"system","content":"You are an assistant."},{"role":"user","content":"What is the weather today?"}],
    "max_tokens": 7,
    "temperature": 0.5,
    "top_p": 0.5
  }'
```

Make sure to replace <api_key> with your own API key.
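If you prefer not to install any third-party package, the same request can be sent with Python's standard library. The sketch below mirrors the curl call above; the helper names build_chat_request and send_chat_request are hypothetical, and it assumes the response follows the OpenAI chat completion format (the text in choices[0].message.content).

```python
import json
import urllib.request

BASE_URL = "https://chat-ai.academiccloud.de/v1"


def build_chat_request(api_key, model, messages, **params):
    """Build (but do not send) a POST request to /chat/completions.

    Extra keyword arguments such as max_tokens or temperature are
    copied into the JSON body unchanged.
    """
    body = {"model": model, "messages": messages, **params}
    return urllib.request.Request(
        url=f"{BASE_URL}/chat/completions",
        data=json.dumps(body).encode("utf-8"),
        headers={
            "Accept": "application/json",
            "Authorization": f"Bearer {api_key}",
            "Content-Type": "application/json",
        },
        method="POST",
    )


def send_chat_request(request, timeout=60):
    """Send the request and return the text of the first choice.

    Assumes an OpenAI-style response body.
    """
    with urllib.request.urlopen(request, timeout=timeout) as response:
        payload = json.load(response)
    return payload["choices"][0]["message"]["content"]
```

For example, `build_chat_request("<api_key>", "meta-llama-3.1-8b-instruct", messages, max_tokens=7, temperature=0.5, top_p=0.5)` reproduces the curl call above; pass the result to `send_chat_request` to run it.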

API Model Names

For more information on the individual models, see the model list.

| Model Name | Capabilities |
|---|---|
| apertus-70b-instruct-2509 | text |
| devstral-2-123b-instruct-2512 | coding |
| deepseek-r1-distill-llama-70b | reasoning |
| gemma-3-27b-it | text, image |
| glm-4.7 | text |
| internvl3.5-30b-a3b | text, image |
| llama-3.1-sauerkrautlm-70b-instruct | text, arcana |
| medgemma-27b-it | text, image |
| meta-llama-3.1-8b-instruct | text |
| llama-3.3-70b-instruct | text |
| mistral-large-3-675b-instruct-2512 | text, image |
| openai-gpt-oss-120b | text |
| qwen3-235b-a22b | reasoning |
| qwen3-30b-a3b-instruct-2507 | text |
| qwen3-30b-a3b-thinking-2507 | text |
| qwen3-32b | text |
| qwen3-coder-30b-a3b-instruct | text, code |
| qwen3-omni-30b-a3b-instruct | text, omni |
| qwen3-vl-30b-a3b-instruct | text, image |
| teuken-7b-instruct-research | text |
| e5-mistral-7b-instruct | embeddings |
| multilingual-e5-large-instruct | embeddings |
| qwen3-embedding-4b | embeddings |

A complete up-to-date list of available models can be retrieved via the following command:

```bash
curl -X POST \
  --url https://chat-ai.academiccloud.de/v1/models \
  --header 'Accept: application/json' \
  --header 'Authorization: Bearer <api_key>' \
  --header 'Content-Type: application/json'
```

API Usage Examples
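The model-list command above returns JSON. Assuming it follows the OpenAI list format (a data array whose entries carry an id field), a short helper can pull out the model names, for example to check that a model is available before using it in a script. The helper names model_ids and is_available are hypothetical.

```python
import json


def model_ids(models_json: str) -> list[str]:
    """Extract the model names from an OpenAI-style /models response.

    Assumes the shape {"object": "list", "data": [{"id": ...}, ...]}.
    """
    payload = json.loads(models_json)
    return sorted(entry["id"] for entry in payload.get("data", []))


def is_available(models_json: str, name: str) -> bool:
    """Return True if `name` appears in the model list."""
    return name in model_ids(models_json)
```

For instance, save the curl output with `> models.json` appended to the command and call `model_ids(open("models.json").read())`.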

The OpenAI (external) models are not generally available for API usage. To configure your requests in greater detail, for example by setting frequency_penalty, seed, max_tokens and more, refer to the OpenAI API reference page.

Chat

You can include an entire conversation in your request. The conversation can come from a previous session with the same model or another one, or even from an exchange between you and a friend or colleague if you would like to ask follow-up questions about it (just be sure to update your system prompt to say “You are a friend/colleague trying to explain something you said that was confusing”).

```bash
curl -i -N -X POST \
  --url https://chat-ai.academiccloud.de/v1/chat/completions \
  --header 'Accept: application/json' \
  --header 'Authorization: Bearer <api_key>' \
  --header 'Content-Type: application/json' \
  --data '{
    "model": "meta-llama-3.1-8b-instruct",
    "messages": [{"role":"system","content":"You are a helpful assistant"},{"role":"user","content":"How tall is the Eiffel tower?"},{"role":"assistant","content":"The Eiffel Tower stands at a height of 324 meters (1,063 feet) above ground level. However, if you include the radio antenna on top, the total height is 330 meters (1,083 feet)."},{"role":"user","content":"Are there restaurants?"}],
    "temperature": 0
  }'
```

For ease of use, you can also access the Chat AI models from a Python script, for example by pasting the code below into a file. The example uses the official openai Python package (pip install openai).

```python
from openai import OpenAI

# API configuration
api_key = '<api_key>'  # Replace with your API key
base_url = "https://chat-ai.academiccloud.de/v1"
model = "meta-llama-3.1-8b-instruct"  # Choose any available model

# Start OpenAI client
client = OpenAI(
    api_key=api_key,
    base_url=base_url,
)

# Get response
chat_completion = client.chat.completions.create(
    messages=[
        {"role": "system", "content": "You are a helpful assistant"},
        {"role": "user", "content": "How tall is the Eiffel tower?"},
        {"role": "assistant", "content": "The Eiffel Tower stands at a height of 324 meters (1,063 feet) above ground level. However, if you include the radio antenna on top, the total height is 330 meters (1,083 feet)."},
        {"role": "user", "content": "Are there restaurants?"},
    ],
    model=model,
)

# Print the full response object
print(chat_completion)

# Print only the generated text
print(chat_completion.choices[0].message.content)
```

In some cases the model is expected to produce a long response, which can take a while with the method above: the entire response is generated first and only then printed to the screen. Streaming lets you receive the response incrementally while it is being generated.

```python
from openai import OpenAI

# API configuration
api_key = '<api_key>'  # Replace with your API key
base_url = "https://chat-ai.academiccloud.de/v1"
model = "meta-llama-3.1-8b-instruct"  # Choose any available model

# Start OpenAI client
client = OpenAI(
    api_key=api_key,
    base_url=base_url,
)

# Get stream
stream = client.chat.completions.create(
    messages=[
        {
            "role": "user",
            "content": "Name the capital city of each country on earth, and describe its main attraction",
        }
    ],
    model=model,
    stream=True,
)

# Print out the response as it arrives
for chunk in stream:
    if chunk.choices:  # skip chunks that carry no choices
        print(chunk.choices[0].delta.content or "", end="", flush=True)
print()
```

If you use Visual Studio Code or JetBrains as your IDE, the recommended way to use your API key, particularly for code completion, is to install the Continue plugin and configure it accordingly. Refer to CoCo AI for further details.
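If you want to display the streamed text and also keep the complete answer (for example, to append it to the conversation history before asking a follow-up question), you can accumulate the deltas while printing them. This sketch consumes the stream object returned by client.chat.completions.create(..., stream=True) in the streaming example above; the function name collect_stream is hypothetical.

```python
import sys


def collect_stream(stream, out=sys.stdout):
    """Print streamed chunks as they arrive and return the full text.

    Chunks without choices, or whose delta has no content, are skipped.
    """
    parts = []
    for chunk in stream:
        if not chunk.choices:
            continue
        piece = chunk.choices[0].delta.content
        if piece:
            out.write(piece)
            out.flush()
            parts.append(piece)
    out.write("\n")
    return "".join(parts)
```

A typical use is `answer = collect_stream(stream)` followed by `messages.append({"role": "assistant", "content": answer})`, so that the next request carries the whole conversation.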

Azure API

Some of our customers may encounter the Azure OpenAI API.

This API is compatible with the OpenAI API, barring minor differences in the JSON responses and the endpoint handling. The official OpenAI Python client offers an AzureOpenAI client to account for these differences.

Because SAIA obtains its external, non-open-weight models from Microsoft Azure, we created a translation layer that makes the Azure models OpenAI-compatible.

List of known differences:

- Addition of content_filter_results in the responses of Azure models.
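If your code compares or stores responses from both Azure-backed and open-weight models, you may want to drop this extra field so that all responses have the same shape. The sketch below removes every content_filter_results key from a parsed JSON response, wherever it appears, and leaves everything else untouched; the function name is hypothetical. With the openai Python client, a plain dict can be obtained from a response object via its model_dump() method.

```python
def strip_content_filter_results(obj):
    """Return a copy of a parsed JSON value without content_filter_results keys.

    Works recursively on dicts and lists; other values are returned as-is.
    The input is not modified.
    """
    if isinstance(obj, dict):
        return {
            key: strip_content_filter_results(value)
            for key, value in obj.items()
            if key != "content_filter_results"
        }
    if isinstance(obj, list):
        return [strip_content_filter_results(item) for item in obj]
    return obj
```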

Image

The API specification is compatible with the OpenAI Image API. However, fetching images from the web is not supported: images must be uploaded as part of the request.

See the following minimal example in Python.

```python
import base64
import mimetypes

from openai import OpenAI

# API configuration
api_key = '<api_key>'  # Replace with your API key
base_url = "https://chat-ai.academiccloud.de/v1"
model = "internvl3.5-30b-a3b"  # Choose any model with image capabilities

# Start OpenAI client
client = OpenAI(
    api_key=api_key,
    base_url=base_url,
)

# Function to encode the image
def encode_image(image_path):
    with open(image_path, "rb") as image_file:
        return base64.b64encode(image_file.read()).decode('utf-8')

# Path to your image
image_path = "test-image.png"

# Getting the base64 string and the matching MIME type (e.g. image/png)
base64_image = encode_image(image_path)
mime_type = mimetypes.guess_type(image_path)[0] or "image/png"

response = client.chat.completions.create(
    model=model,
    messages=[
        {
            "role": "user",
            "content": [
                {"type": "text", "text": "What is in this image?"},
                {
                    "type": "image_url",
                    "image_url": {"url": f"data:{mime_type};base64,{base64_image}"},
                },
            ],
        }
    ],
)

print(response.choices[0].message.content)
```

Text to Image

```bash
curl -i -N -X POST \
  --url https://chat-ai.academiccloud.de/v1/images/generations \
  --header 'Accept: application/json' \
  --header 'Authorization: Bearer <key>' \
  --header 'Content-Type: application/json' \
  --data '{
    "prompt": "flower",
    "response_format": "b64_json",
    "model": "flux",
    "size": "1024x1024",
    "n": 1,
    "quality": "standard"
  }'
```

Replace <key> with the key provided by GWDG. This curl command uses Flux.1-schnell as its backend model.
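With response_format set to b64_json, the image is returned base64-encoded inside the JSON body. Assuming the response follows the OpenAI Images API shape (a data array whose entries carry a b64_json field), the sketch below decodes each image and writes it to disk. Save the response without -i, which would prepend the HTTP headers to the JSON. The helper name, the file naming and the .png extension are assumptions for illustration.

```python
import base64
import json
from pathlib import Path


def save_generated_images(response_json: str, prefix: str = "generated") -> list[Path]:
    """Decode every b64_json image in an Images API response and save it.

    Assumes the OpenAI-style shape {"data": [{"b64_json": "..."}, ...]}.
    Files are named <prefix>_0.png, <prefix>_1.png, ...
    """
    payload = json.loads(response_json)
    paths = []
    for i, item in enumerate(payload.get("data", [])):
        path = Path(f"{prefix}_{i}.png")
        path.write_bytes(base64.b64decode(item["b64_json"]))
        paths.append(path)
    return paths
```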

Image to Image

```bash
curl https://chat-ai.academiccloud.de/v1/images/edits/ \
  -H "Authorization: Bearer <key>" \
  -H "Content-Type: multipart/form-data" \
  -H "inference-service: image-edit-2511" \
  -F "prompt=make style to Van-Gogh" \
  -F "image=@./<img.png or jpg>" \
  -o "edited_output.png"
```

Replace <key> with the key provided by GWDG, and <img.png or jpg> with the path to your PNG or JPEG image. curl writes the response to edited_output.png. This curl command uses Qwen-Image-Edit-2511 as its backend model.

Voice to Text

```bash
curl -i 'https://saia.gwdg.de/v1/audio/<translations or transcriptions>' \
  --header 'Accept: */*' \
  --header 'Authorization: Bearer <key>' \
  -H "Content-Type: multipart/form-data" \
  -F model="whisper-large-v2" \
  -F "file=@./<voice.wav, mp4, mp3 or flac>" \
  -F response_format=<vtt or text or srt>
```

Replace <key> with the key provided by GWDG, choose either transcriptions or translations in the URL, set response_format to vtt, text or srt, and point file to your audio file (WAV, MP4, MP3 or FLAC). This curl command uses whisper-large-v2 as its backend model.
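Because these endpoints follow the OpenAI audio API, the openai Python client's audio methods may also work against them. The sketch below is written under that assumption: it takes an already configured client (for example OpenAI(api_key=..., base_url="https://saia.gwdg.de/v1"), matching the curl URL above), validates the arguments, and calls either the transcription or the translation method. The function name transcribe is hypothetical.

```python
from pathlib import Path

ALLOWED_TASKS = ("transcriptions", "translations")
ALLOWED_FORMATS = ("text", "srt", "vtt")


def transcribe(client, audio_path, task="transcriptions",
               response_format="text", model="whisper-large-v2"):
    """Send an audio file to the transcription or translation endpoint.

    `client` is expected to be an openai.OpenAI instance whose base_url
    points at the SAIA API; this function only picks the method
    (client.audio.transcriptions or client.audio.translations)
    and checks the arguments before uploading the file.
    """
    if task not in ALLOWED_TASKS:
        raise ValueError(f"task must be one of {ALLOWED_TASKS}")
    if response_format not in ALLOWED_FORMATS:
        raise ValueError(f"response_format must be one of {ALLOWED_FORMATS}")
    endpoint = getattr(client.audio, task)
    with Path(audio_path).open("rb") as audio_file:
        return endpoint.create(model=model, file=audio_file,
                               response_format=response_format)
```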

Embeddings

Embeddings are only available via the API and follow the same specification as the OpenAI Embeddings API.

See the following minimal example.

```bash
curl https://chat-ai.academiccloud.de/v1/embeddings \
  -H "Authorization: Bearer <api_key>" \
  -H "Content-Type: application/json" \
  -d '{
    "input": "The food was delicious and the waiter...",
    "model": "e5-mistral-7b-instruct",
    "encoding_format": "float"
  }'
```

For a code example of developing RAG applications with LlamaIndex, see: https://gitlab-ce.gwdg.de/hpc-team-public/chat-ai-llamaindex-examples
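A common next step is to compare embeddings, for example to rank documents by their similarity to a query. Assuming the response follows the OpenAI embeddings format (a data array whose entries carry an index and an embedding list of floats), the sketch below extracts the vectors in input order and computes cosine similarity in plain Python. The helper names are hypothetical; in the OpenAI Embeddings API, input may also be a list of strings, which yields one vector per string.

```python
import json
import math


def embeddings_from_response(response_json: str) -> list[list[float]]:
    """Return the embedding vectors, ordered by their index field."""
    data = json.loads(response_json)["data"]
    return [item["embedding"] for item in sorted(data, key=lambda d: d["index"])]


def cosine_similarity(a: list[float], b: list[float]) -> float:
    """Cosine of the angle between two vectors (1.0 means same direction)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        raise ValueError("cannot compare a zero vector")
    return dot / (norm_a * norm_b)
```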

RAG/Arcanas

Arcanas are also accessible via the API. A minimal example using curl:

```bash
curl -i -X POST \
  --url https://chat-ai.academiccloud.de/v1/chat/completions \
  --header 'Accept: application/json' \
  --header 'Authorization: Bearer <api_key>' \
  --header 'Content-Type: application/json' \
  --header 'inference-service: saia-openai-gateway' \
  --data '{
    "model": "qwen3-30b-a3b-instruct-2507",
    "messages":[{"role":"system","content":"You are an assistant."},{"role":"user","content":"What is Chat-Ai?"}],
    "enable-tools": true,
    "arcana" : {
      "id": "<the Arcana ID>"
    },
    "temperature": 0.0,
    "top_p": 0.05
  }'
```

Docling

SAIA provides Docling as a service via the API on this endpoint:

https://chat-ai.academiccloud.de/v1/documents

A minimal example using curl is:

```bash
curl -X POST "https://chat-ai.academiccloud.de/v1/documents/convert" \
  -H "accept: application/json" \
  -H 'Authorization: Bearer <api_key>' \
  -H "Content-Type: multipart/form-data" \
  -F "document=@/path/to/your/file.pdf"
```

The result is a JSON response like:

```json
{
  "response_type": "MARKDOWN",
  "filename": "example_document",
  "images": [
    {
      "type": "picture",
      "filename": "image1.png",
      "image": "data:image/png;base64, xxxxxxx..."
    },
    {
      "type": "table",
      "filename": "table1.png",
      "image": "data:image/png;base64, xxxxxxx..."
    }
  ],
  "markdown": "#Your Markdown File"
}
```

To extract only the “markdown” field from the response, you can use the jq command-line tool (on Debian/Ubuntu, install it with sudo apt install jq). To store the output in a file, append > <output-file-name> to the command.

Here is an example to convert a PDF file to markdown and store it in output.md:

```bash
curl -X POST "https://chat-ai.academiccloud.de/v1/documents/convert" \
  -H "accept: application/json" \
  -H 'Authorization: Bearer <api_key>' \
  -H "Content-Type: multipart/form-data" \
  -F "document=@/path/to/your/file.pdf" \
  | jq -r '.markdown' \
  > output.md
```

You can use advanced settings in your request by adding query parameters:

| Parameter | Values | Description |
|---|---|---|
| response_type | markdown, html, json or tokens | The output format |
| extract_tables_as_images | true or false | Whether tables should be returned as images |
| image_resolution_scale | 1, 2, 3, 4 | Scaling factor for image resolution |
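When calling the endpoint from a script, the query string can be assembled with the standard library instead of by hand, and the converted document read from the field named after the response type (markdown or html in the examples on this page). The sketch below assumes exactly that field naming; the helper names are hypothetical, and sending the multipart request is left to your HTTP client of choice.

```python
import json
from urllib.parse import urlencode

CONVERT_URL = "https://chat-ai.academiccloud.de/v1/documents/convert"


def convert_url(response_type="markdown", extract_tables_as_images=False,
                image_resolution_scale=1):
    """Build the /documents/convert URL with the optional query parameters."""
    if image_resolution_scale not in (1, 2, 3, 4):
        raise ValueError("image_resolution_scale must be 1, 2, 3 or 4")
    query = urlencode({
        "response_type": response_type,
        # The parameter table lists the values as true/false, so send lowercase strings.
        "extract_tables_as_images": str(extract_tables_as_images).lower(),
        "image_resolution_scale": image_resolution_scale,
    })
    return f"{CONVERT_URL}?{query}"


def converted_content(response_json: str) -> str:
    """Return the converted document from a Docling response.

    Assumes the content sits in a field named after response_type in
    lowercase, e.g. "markdown" or "html".
    """
    payload = json.loads(response_json)
    field = payload["response_type"].lower()
    return payload[field]
```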

For example, to extract tables as images, scale the image resolution by 4, and convert to HTML, you can call:

https://chat-ai.academiccloud.de/v1/documents/convert?response_type=html&extract_tables_as_images=true&image_resolution_scale=4

which will result in an output like:

```json
{
  "response_type": "HTML",
  "filename": "example_document",
  "images": [
    ...
  ],
  "html": "#Your HTML data"
}
```

API Limits

You can check your current API usage limits and remaining quota directly from the HTTP response headers. Run the following command (replace <your-API-Key> with your actual key, and adjust the endpoint as needed):

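```bash
curl -i -H "Authorization: Bearer <your-API-Key>" \
  -H "Content-Type: application/json" \
  https://saia.gwdg.de/v1/chat/completions
```

The response includes both the body and the rate-limit headers. In the example output below, the request is not a valid chat completion, hence the 400 status code, but the rate-limit headers are still present: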

```
HTTP/2 400
content-type: application/json
content-length: 43
x-ratelimit-limit-minute: 1000
x-ratelimit-limit-hour: 10000
x-ratelimit-limit-day: 50002
x-ratelimit-remaining-minute: 999
x-ratelimit-remaining-hour: 9999
x-ratelimit-remaining-day: 50001
ratelimit-limit: 1000
ratelimit-remaining: 999
ratelimit-reset: 1
date: Mon, 20 Oct 2025 10:13:58 GMT
server: uvicorn
via: kong/3.6.1
```

Interpreting the Headers

- X-RateLimit-Limit-*: maximum number of requests allowed per time window (minute, hour, day).
- X-RateLimit-Remaining-*: number of requests still available before hitting the limit.
- ratelimit-reset: time (in seconds) until the counter resets.
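A client can use these headers to pause before it runs out of quota. The sketch below reads them from a header mapping (names are matched case-insensitively, since the example output uses lowercase) and suggests how long to wait before the next request. The threshold policy, the 60-second fallback and the function name are our own assumptions, not part of the API; it is also a simplification, since if the hourly or daily quota is exhausted, waiting ratelimit-reset seconds may not be enough.

```python
def seconds_to_wait(headers: dict[str, str], min_remaining: int = 1) -> float:
    """Suggest a pause (in seconds) based on SAIA rate-limit headers.

    Returns 0.0 if at least `min_remaining` requests are left in every
    x-ratelimit-remaining-* window; otherwise returns ratelimit-reset,
    falling back to an assumed 60 seconds if that header is missing.
    """
    lower = {name.lower(): value for name, value in headers.items()}
    remaining = [
        int(value)
        for name, value in lower.items()
        if name.startswith("x-ratelimit-remaining-")
    ]
    if not remaining or min(remaining) >= min_remaining:
        return 0.0
    return float(lower.get("ratelimit-reset", "60"))
```

With urllib, for example, you can pass `dict(response.headers)`; with the requests library, `response.headers` can be passed directly.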

Developer reference

The GitHub repositories SAIA-Hub, SAIA-HPC and Chat AI provide all the components of the architecture shown in the diagram above.

Further services

If you have more questions, feel free to contact us at support@gwdg.de.